
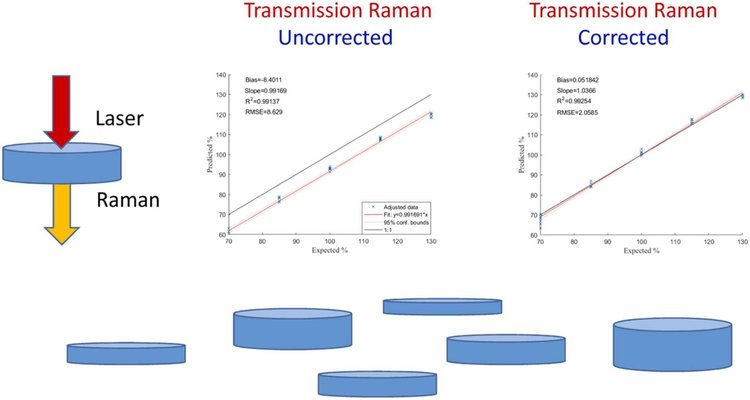

Transmission Raman Spectroscopy (TRS) is a widely used analytical method for assessing content uniformity in pharmaceutical products. It enables quantification of both active pharmaceutical ingredients (APIs) and excipients in the final dosage form. During manufacturing, these products are exposed to various physical and chemical stressors, such as variations in compaction force and tablet thickness, which can alter the internal optical path of Raman photons. Because near-infrared absorption within the tablet varies with wavelength, a change in optical path length attenuates different parts of the Raman spectrum to different degrees, distorting the relative band intensities.
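The attenuation mechanism described above can be illustrated with a short numerical sketch. It assumes a Beer-Lambert-type loss, I = I0 · exp(-μ(λ) · L), where L stands in for the effective photon path length (in a turbid tablet this is longer than the thickness and depends on porosity). The 830 nm excitation wavelength, the absorption curve `absorption_coefficient` with a band near 970 nm, and the helper names `raman_shift_to_nm` and `attenuate` are all hypothetical choices for illustration, not values or code from the study.

```python
import numpy as np

# Assumption: 830 nm excitation, common in TRS instruments but not stated in the study.
EXCITATION_NM = 830.0


def raman_shift_to_nm(shift_cm1, excitation_nm=EXCITATION_NM):
    """Convert a Stokes Raman shift (cm^-1) to the absolute scattered wavelength (nm)."""
    return 1e7 / (1e7 / excitation_nm - shift_cm1)


def absorption_coefficient(wavelength_nm):
    """Hypothetical NIR absorption coefficient (cm^-1): flat baseline plus a band near 970 nm."""
    return 0.5 + 2.0 * np.exp(-(((wavelength_nm - 970.0) / 30.0) ** 2))


def attenuate(spectrum, shifts_cm1, path_length_cm):
    """Apply Beer-Lambert attenuation exp(-mu(lambda) * L) channel by channel."""
    mu = absorption_coefficient(raman_shift_to_nm(np.asarray(shifts_cm1, dtype=float)))
    return np.asarray(spectrum, dtype=float) * np.exp(-mu * path_length_cm)


# Two hypothetical bands of equal intrinsic intensity, seen through two path lengths.
shifts = np.array([1000.0, 1600.0])
intrinsic = np.array([1.0, 1.0])
for path_cm in (0.3, 0.5):
    observed = attenuate(intrinsic, shifts, path_cm)
    print(f"L = {path_cm} cm: I(1600)/I(1000) = {observed[1] / observed[0]:.3f}")
```

Because the 1600 cm⁻¹ band falls closer to the hypothetical absorption band, its intensity relative to the 1000 cm⁻¹ band drops as L grows, even though the composition is unchanged. This is the kind of ratio change that a quantitative model can misread as a change in content.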

Such distortions can carry over into quantitative models, introducing systematic prediction errors. In this study, we examined the effects of tablet thickness, porosity, and compaction force on the accuracy of TRS-based quantitative models. To address these effects, we implemented a basic spectral standardization technique aimed at correcting the distortions.
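The abstract does not name the standardization technique, so the sketch below shows only one generic possibility and is not the authors' method: a channel-wise correction vector. It assumes that spectra recorded under a reference condition and under a distorting condition (for example, a different compaction force) have the same average composition. Any channel-to-channel change in their mean ratio can then be attributed to the optical distortion. The function names `correction_vector` and `standardize` and the synthetic data are hypothetical.

```python
import numpy as np


def correction_vector(reference_spectra, condition_spectra, eps=1e-12):
    """Channel-wise ratio of the mean reference spectrum to the mean spectrum under another condition.

    Assumes both sets share the same average composition, so the ratio reflects
    the optical distortion rather than chemical differences.
    """
    ref_mean = np.asarray(reference_spectra, dtype=float).mean(axis=0)
    cond_mean = np.asarray(condition_spectra, dtype=float).mean(axis=0)
    return ref_mean / np.maximum(cond_mean, eps)


def standardize(spectra, correction):
    """Rescale each spectrum (one per row) channel by channel onto the reference condition."""
    return np.asarray(spectra, dtype=float) * correction


# Synthetic check: the same tablets "measured" at a reference and a distorting condition.
rng = np.random.default_rng(0)
channels = np.linspace(0.0, 1.0, 50)
reference = 1.0 + rng.random((10, 50))   # hypothetical spectra: 10 tablets x 50 channels
distortion = np.exp(-0.8 * channels)      # hypothetical smooth, wavelength-dependent attenuation
distorted = reference * distortion
corr = correction_vector(reference, distorted)
corrected = standardize(distorted, corr)
print("max deviation after standardization:", np.abs(corrected - reference).max())
```

In practice, the correction vector would be estimated from calibration tablets measured at each condition and then applied to new spectra from that condition. Because a purely multiplicative correction cannot undo additive effects such as baseline changes, a real implementation may need additional preprocessing.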

Our results showed clear improvements in model performance: the root mean square error (RMSE) for the full calibration set decreased from 2.5% to 2.0%, and the systematic bias between tablets at the two extremes of compaction was nearly eliminated (from 8.40% to approximately 0%). Residual errors also decreased substantially, with the RMSE falling from 8.63% to 2.06%.
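The figures of merit quoted above can be computed from reference and predicted contents as follows. RMSE is the square root of the mean squared prediction error. The bias between the extreme-compaction groups is sketched here as the difference in mean signed error between high- and low-compaction tablets; this is one plausible definition, assumed for illustration, since the abstract does not state the exact formula. The function names and the toy numbers are hypothetical and are not data from the study.

```python
import numpy as np


def rmse(reference, predicted):
    """Root mean square error, in the same units as the inputs (e.g. % content)."""
    err = np.asarray(predicted, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean(err ** 2)))


def mean_bias(reference, predicted):
    """Mean signed error; positive values mean the model over-predicts."""
    err = np.asarray(predicted, dtype=float) - np.asarray(reference, dtype=float)
    return float(np.mean(err))


def extreme_group_bias(ref_low, pred_low, ref_high, pred_high):
    """Hypothetical definition: mean bias at high compaction minus mean bias at low compaction."""
    return mean_bias(ref_high, pred_high) - mean_bias(ref_low, pred_low)


# Toy numbers only, not data from the study.
ref = [10.0, 10.0, 10.0]
low = [9.5, 9.0, 9.5]      # under-predicted at low compaction
high = [10.5, 11.0, 10.5]  # over-predicted at high compaction
print("RMSE (all tablets):", rmse(ref + ref, low + high))
print("bias difference:", extreme_group_bias(ref, low, ref, high))
```

A bias difference near zero after standardization would indicate that predictions no longer depend systematically on the compaction condition. The RMSE, by contrast, captures both this systematic component and random scatter.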
